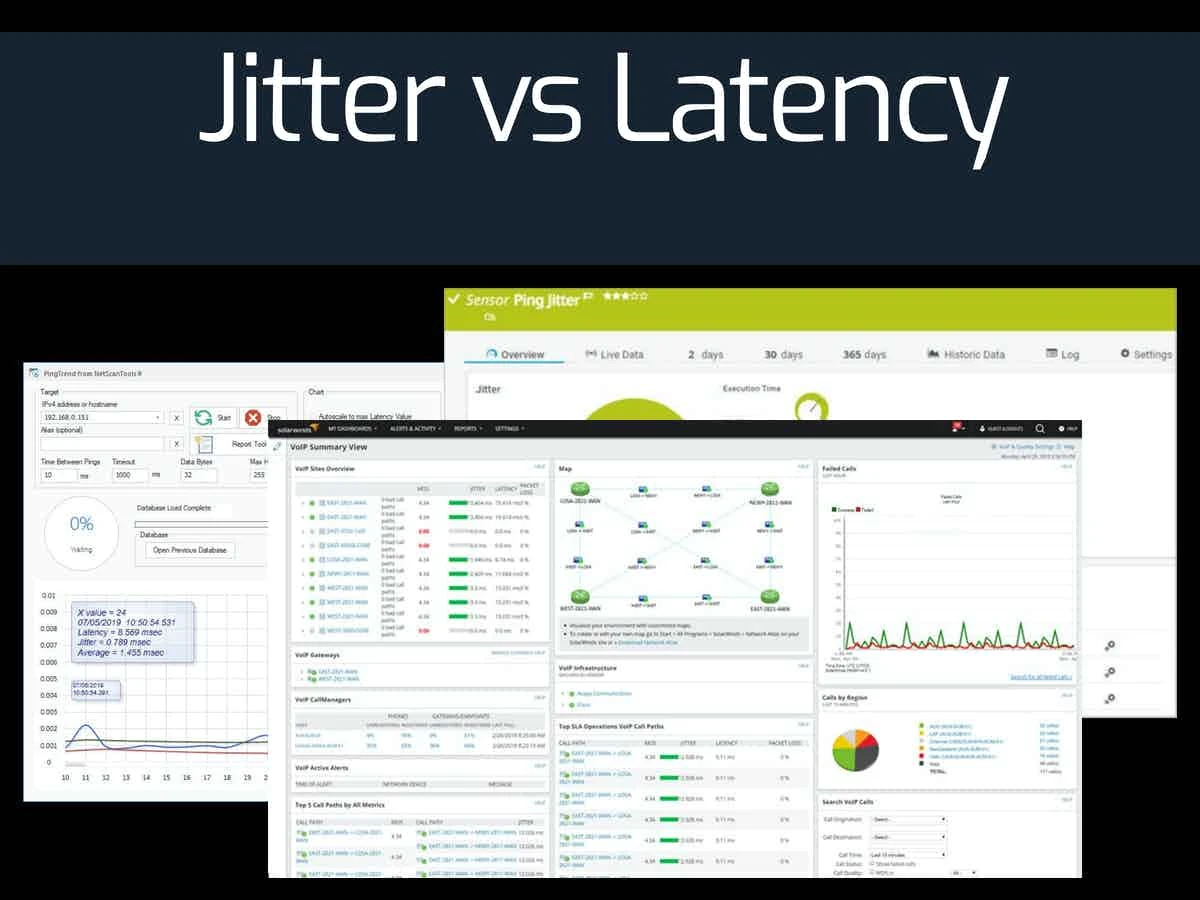

When it comes to network performance, latency and jitter are often confused, but they are two very different concepts. Latency is the amount of time it takes for a packet of data to travel from one point on a network to another, while jitter is the variation in delay from one packet to the next as packets travel across a network.

Latency is typically measured in milliseconds (ms). It can be expressed as “one-way” latency, the time taken for a packet to travel from the sender to the receiver, or as round-trip time (RTT), the time for a packet to reach the receiver and for a reply to come back. The “ping” figure reported by most tools is a round-trip measurement. Latency varies with several factors, including distance, the type of connection, and even weather conditions. For example, if you’re transmitting data over a satellite or wireless connection, you may experience higher latency than if you were using an Ethernet cable.

Jitter, on the other hand, measures how much the delay (in milliseconds) varies from one packet to the next. It can be caused by congestion or by interference from other signals, and it can also be affected by how many packets are being sent over the same route at once. To put it simply: jitter measures how much packet delay fluctuates when sending data over a network.
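There is more than one way to put a number on jitter. As a minimal sketch, the Python function below follows the smoothed interarrival-jitter estimator described in RFC 3550 (the RTP specification), except that all times are assumed to be in milliseconds rather than RTP timestamp units. The function name and the sample values are illustrative.

```python
def rfc3550_jitter(send_times_ms, recv_times_ms):
    """Smoothed interarrival jitter in the style of RFC 3550, section 6.4.1.

    send_times_ms[i] and recv_times_ms[i] are when packet i was sent and
    received. Only changes in transit time are used, so a constant offset
    between the sender's and receiver's clocks cancels out.
    """
    jitter = 0.0
    prev_transit = None
    for sent, received in zip(send_times_ms, recv_times_ms):
        transit = received - sent
        if prev_transit is not None:
            d = abs(transit - prev_transit)  # change in delay between consecutive packets
            jitter += (d - jitter) / 16      # move 1/16 of the way toward the new sample
        prev_transit = transit
    return jitter


# Packets sent every 20 ms; their delay alternates between 30 ms and 40 ms.
sent = [0, 20, 40, 60]
received = [30, 60, 70, 100]
print(f"{rfc3550_jitter(sent, received):.2f} ms")
```

Because each new sample only moves the estimate 1/16 of the way, the value climbs gradually toward the 10 ms swing instead of jumping straight to it, and a stream with perfectly constant delay reports 0.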

When evaluating your network performance, it’s important to consider both latency and jitter, since both can have a major impact on user experience and application performance. Ideally, you should aim for jitter below 30ms, no more than 1% packet loss, and a maximum one-way latency of 150ms (300ms round trip). Keep an eye on jitter levels so that traffic doesn’t suffer from excessive delays or drops caused by fluctuating transmission times.

In short, latency and jitter measure different aspects of network performance, and both are important when evaluating network health and application performance. Understanding these two metrics will help ensure that your users have an optimal experience when using your services or applications.

Understanding Good Latency and Jitter

Latency is the time it takes for data to travel from one point to another, so good latency means low latency. It can be affected by many factors, such as distance and network congestion. Ideally, latency should be no more than 150ms one-way (300ms round trip).

Jitter, on the other hand, is the variation in latency over time. It measures how consistent your connection is when transmitting data. For a reliable connection, jitter should be below 30ms, and packet loss should be no more than 1%.
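If you collect these measurements regularly, the targets in this section can be turned into a simple automated check. The Python sketch below hard-codes the 150ms one-way latency, 30ms jitter, and 1% packet-loss figures; the `LinkStats` class and `check_link` function are hypothetical names, and how you obtain the measurements is left open.

```python
from dataclasses import dataclass

# Targets quoted in this article; real applications may need different values.
MAX_ONE_WAY_LATENCY_MS = 150.0  # no more than this
MAX_JITTER_MS = 30.0            # jitter should stay below this
MAX_PACKET_LOSS_PCT = 1.0       # no more than this


@dataclass
class LinkStats:
    one_way_latency_ms: float
    jitter_ms: float
    packet_loss_pct: float


def check_link(stats: LinkStats) -> list[str]:
    """Return a list of problems; an empty list means every target is met."""
    problems = []
    if stats.one_way_latency_ms > MAX_ONE_WAY_LATENCY_MS:
        problems.append(f"one-way latency {stats.one_way_latency_ms} ms is above {MAX_ONE_WAY_LATENCY_MS} ms")
    if stats.jitter_ms >= MAX_JITTER_MS:
        problems.append(f"jitter {stats.jitter_ms} ms is not below {MAX_JITTER_MS} ms")
    if stats.packet_loss_pct > MAX_PACKET_LOSS_PCT:
        problems.append(f"packet loss {stats.packet_loss_pct}% is above {MAX_PACKET_LOSS_PCT}%")
    return problems


print(check_link(LinkStats(one_way_latency_ms=40, jitter_ms=12, packet_loss_pct=0.2)))  # []
print(check_link(LinkStats(one_way_latency_ms=180, jitter_ms=35, packet_loss_pct=0.5)))
```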

Differences Between Latency (Delay) and Jitter

The main difference between latency and jitter is that latency is the amount of time it takes for a packet of data to travel from its source to its destination, while jitter is the variability in that time from one packet to the next. Latency can be improved by using faster internet connections or by reducing the number of routers between the source and destination. Jitter is harder to prevent outright, but its effects can be reduced: when packets carry timestamps, the receiver can hold them briefly in a buffer and play them out in order and at a steady pace, even if they arrived unevenly.
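To illustrate how timestamps help the receiver cope with jitter, here is a minimal sketch of a fixed-size jitter buffer in Python. It assumes each packet carries the sender’s timestamp in milliseconds and that the receiver records the arrival time on its own clock. The function name, the fixed buffer size, and the rule of dropping late packets are simplifying assumptions; real receivers often adapt the buffer size as conditions change.

```python
def playout_schedule(packets, buffer_ms):
    """Schedule packets for steady playout using their sender timestamps.

    packets: (sender_timestamp_ms, arrival_time_ms) pairs, possibly out of order.
    buffer_ms: extra delay the receiver waits before playing the first packet.
    Returns (played, late): played holds (timestamp, playout_time) pairs in
    timestamp order; late holds timestamps of packets that missed their slot.
    """
    ordered = sorted(packets)  # reorder by sender timestamp
    first_ts, first_arrival = ordered[0]
    # Every packet plays at its timestamp plus the same fixed offset, so the
    # spacing between packets at playout matches their spacing at the sender.
    offset = (first_arrival - first_ts) + buffer_ms
    played, late = [], []
    for ts, arrival in ordered:
        playout_time = ts + offset
        if arrival <= playout_time:
            played.append((ts, playout_time))
        else:
            late.append(ts)  # arrived after its slot; treated as lost
    return played, late


# Packets sent every 20 ms, arriving unevenly and out of order.
arrivals = [(20, 85), (0, 50), (60, 170), (40, 95)]
played, late = playout_schedule(arrivals, buffer_ms=30)
print(played)  # [(0, 80), (20, 100), (40, 120)]
print(late)    # [60]
```

The packets that arrived in time are played exactly 20 ms apart, just as they were sent. A larger buffer (70 ms in this example) would also have saved the last packet, at the cost of extra latency, which is the basic trade-off of any jitter buffer.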

Understanding Latency, Throughput, and Jitter

Latency is the amount of time it takes for a packet of data to travel from its source (e.g. a computer) to its destination (e.g. another computer), and is typically measured in milliseconds (ms). Throughput is the rate at which data is actually transferred over a network, and is typically measured in bits per second (bps). Jitter is the variation in latency between packets of data as they travel over a network, and is also measured in milliseconds. Latency, throughput, and jitter are interrelated; for example, an increase in latency can reduce throughput and is often accompanied by an increase in jitter.
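One concrete reason higher latency can cap throughput: a TCP sender can only have a limited amount of unacknowledged data (its window) in flight, so a single connection delivers at most about one window per round trip. The sketch below assumes a fixed 65,535-byte window, the largest TCP allows without the window-scaling option, and ignores packet loss and protocol overhead; the function name is illustrative.

```python
def max_throughput_mbps(window_bytes, rtt_ms):
    """Upper bound on one connection's throughput: one window per round trip."""
    bits_per_round_trip = window_bytes * 8
    return bits_per_round_trip / (rtt_ms / 1000) / 1_000_000


WINDOW = 65_535  # bytes; the TCP maximum without window scaling
for rtt in (10, 50, 100):
    print(f"RTT {rtt:>3} ms -> at most {max_throughput_mbps(WINDOW, rtt):.1f} Mbps")
```

Under these assumptions, ten times the round-trip time means one tenth of the maximum throughput, no matter how fast the underlying link is.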

Can Jitter Be Higher Than Latency?

Yes, although it is uncommon. Jitter is a measure of the variability in the latency of transmission, while latency here refers to the average time it takes for a packet to travel from source to destination. Because jitter measures variation rather than the delay itself, it can exceed the average latency when delays are short on average but fluctuate widely. To reduce jitter, network engineers may adjust routing and use monitoring to find where the delay variation originates, aiming for more consistent delivery times.
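A small worked example shows how jitter can end up larger than the average latency. The sketch below uses one simple definition of jitter, the mean absolute difference between the delays of consecutive packets (an assumption; tools differ in how they compute it), and a made-up delay sequence that alternates between 1ms and 100ms.

```python
from statistics import mean


def mean_consecutive_jitter(delays_ms):
    """Jitter as the mean absolute difference between consecutive packet delays."""
    diffs = [abs(b - a) for a, b in zip(delays_ms, delays_ms[1:])]
    return mean(diffs)


# Hypothetical one-way delays for six packets, alternating short and long.
delays = [1, 100, 1, 100, 1, 100]
print(f"average latency: {mean(delays):.1f} ms")            # average latency: 50.5 ms
print(f"jitter: {mean_consecutive_jitter(delays):.1f} ms")  # jitter: 99.0 ms
```

Here the jitter is almost twice the average latency. With a more typical sequence, such as delays that wander between 40ms and 45ms, jitter would be a few milliseconds, far below the average latency.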

Is 60 Milliseconds of Latency Considered Good?

Yes, 60ms of latency is generally considered good for gaming. An acceptable latency (or ping) is usually around 40 to 60 milliseconds (ms) or lower, while latency over 100ms will usually mean noticeable lag. You should expect good performance with a latency of 60ms or lower.

Is 12ms Jitter Considered Good?

The short answer is yes, 12ms of jitter is generally considered good. Jitter values below 30ms are considered acceptable for most applications, so 12ms is well within that range, and data and audio transmissions should not be significantly affected. However, some applications have stricter jitter requirements, so if you’re unsure, check the requirements of the specific application you’re using.

Understanding 100ms Latency

Latency is a measure of how long it takes for data to travel from one point to another. A latency, or ping, of 100ms means it takes 100 milliseconds for a signal to travel from your device to the hosting server and back again. This affects how quickly you receive information from the server, which in turn affects your overall user experience. Low latency makes for smoother gameplay because there is less lag, while high latency causes delays when loading content and can cause stuttering or even freezing during gaming.
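Ping tools typically measure this round trip with ICMP echo messages, which usually require raw sockets and elevated privileges. As a stand-in, the minimal Python sketch below times round trips against a UDP echo server that it starts on the local machine. The helper names are hypothetical, and on the loopback interface the measured times will be far below the 100ms discussed here.

```python
import socket
import threading
import time


def start_udp_echo_server(host="127.0.0.1"):
    """Start a background UDP server that sends every datagram straight back."""
    server = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    server.bind((host, 0))  # port 0 lets the OS choose a free port

    def serve():
        while True:
            data, addr = server.recvfrom(2048)
            server.sendto(data, addr)

    threading.Thread(target=serve, daemon=True).start()
    return server.getsockname()[1]


def measure_rtt_ms(host, port, count=5, timeout_s=1.0):
    """Send count probes and return the round-trip times (ms) of those that came back."""
    rtts = []
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as client:
        client.settimeout(timeout_s)
        for seq in range(count):
            probe = seq.to_bytes(4, "big")  # sequence number lets us match replies
            start = time.perf_counter()
            client.sendto(probe, (host, port))
            try:
                reply, _ = client.recvfrom(2048)
            except socket.timeout:
                continue  # no reply in time: count it as a lost probe
            if reply == probe:  # ignore stray replies to earlier probes
                rtts.append((time.perf_counter() - start) * 1000)
    return rtts


port = start_udp_echo_server()
samples = measure_rtt_ms("127.0.0.1", port)
print([f"{rtt:.3f} ms" for rtt in samples])
```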

Understanding Good Latency and Jitter for Gaming

For gaming, latency below 100ms is typically acceptable, with 20-40ms being optimal. Jitter, the variability of latency over time, should be as low as possible to ensure smooth gameplay; jitter above 50ms can lead to lag, rubber-banding, or other issues that make gaming unenjoyable.

Is 20ms Jitter Considered Good?

A jitter of 20ms is a good result and is generally considered the threshold for acceptable performance in real-time conversations. Keeping jitter at or below 20ms should keep your calls clear, without noticeable distortion. Above this value, audio quality degrades to varying degrees, depending on how high the jitter goes.

The Four Components of Latency

The four components of latency are:

1. Processing Delay – The time a device such as a router takes to examine a packet’s header and decide where to send it. On modern hardware this is usually a small part of the total.

2. Queueing Delay – The time a packet waits in a buffer at a node such as a router or switch. It grows when more packets arrive than the node can forward at once, which makes it the component most sensitive to congestion.

3. Transmission Delay – The time needed to push all of a packet’s bits onto the link. It depends on the packet size and the link’s bit rate, not on distance.

4. Propagation Delay – The time a signal takes to travel the physical distance between two points. It is limited by the signal speed in the medium, which is close to the speed of light (roughly two-thirds of it in optical fiber).

All four components add up to end-to-end latency and must be taken into account when measuring network performance. Over long distances propagation delay usually dominates, while on a congested link queueing delay can become the largest contributor.
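The components can be added up directly. The Python sketch below assumes a single link, a 1,500-byte packet, a 100 Mbps link, 1,000 km of optical fiber with signals travelling at about 2e8 m/s, and small made-up values for processing and queueing delay; all of these numbers, and the function name, are illustrative.

```python
def latency_components_ms(packet_bytes, link_bps, distance_m,
                          processing_ms, queueing_ms, signal_speed_mps=2e8):
    """Return each latency component in milliseconds for one packet on one link."""
    transmission_ms = packet_bytes * 8 / link_bps * 1000   # time to put every bit on the wire
    propagation_ms = distance_m / signal_speed_mps * 1000  # time for the signal to cross the distance
    return {
        "processing": processing_ms,
        "queueing": queueing_ms,
        "transmission": transmission_ms,
        "propagation": propagation_ms,
    }


parts = latency_components_ms(packet_bytes=1500, link_bps=100e6, distance_m=1_000_000,
                              processing_ms=0.05, queueing_ms=1.0)
for name, ms in parts.items():
    print(f"{name:>12}: {ms:.3f} ms")
print(f"{'total':>12}: {sum(parts.values()):.3f} ms")
```

With these numbers, propagation (5ms) dwarfs transmission (0.12ms). A faster link would shrink transmission delay further, but propagation delay stays the same until the path itself gets shorter.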

Causes of High Latency and Jitter

High latency and jitter are caused by a variety of factors, including:

1. Network Congestion – This occurs when the capacity of a network link is exceeded, leading to an increase in the amount of time it takes for data packets to travel from one location to another. Network congestion can be caused by a variety of factors, including high traffic levels, inefficient routing algorithms, or overloaded hardware.

2. Improperly Provisioned Routers – If routers aren’t configured or sized properly, packets may have to wait longer than necessary at each hop along the way, adding latency.

3. Distance – The further apart two devices are, the longer it takes for data to travel between them. Signals need more time to cover a longer physical path, and packets usually have to pass through more networks and routers before reaching their destination.

4. Physical Damage or Interference – An issue with any component along the path can cause latency and jitter issues as well as packet loss. This could be due to physical damage (such as cable cuts) or interference from outside sources (such as electromagnetic radiation).

5. Applications – Applications on either end of the connection can also impact latency and jitter if they aren’t configured correctly or aren’t optimized for performance.
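To see why network congestion hurts so much, the textbook M/M/1 queue is a convenient approximation: packets arrive at random at an average rate lambda, a link forwards them at an average rate mu, and the mean time a packet spends waiting plus being sent is 1 / (mu - lambda). Real router traffic does not follow this model exactly; the Python sketch below, with a hypothetical link that forwards 1,000 packets per second, only illustrates the trend.

```python
def mm1_delay_ms(arrival_rate_pps, service_rate_pps):
    """Mean time in system (queueing plus service) for an M/M/1 queue, in milliseconds."""
    if arrival_rate_pps >= service_rate_pps:
        raise ValueError("arrivals meet or exceed capacity: the queue grows without bound")
    return 1000 / (service_rate_pps - arrival_rate_pps)


CAPACITY_PPS = 1000  # hypothetical link that forwards 1,000 packets per second
for arrivals in (500, 900, 990):
    load = arrivals / CAPACITY_PPS
    print(f"load {load:.0%}: mean delay {mm1_delay_ms(arrivals, CAPACITY_PPS):.1f} ms")
```

In this model, going from 90% to 99% load multiplies the mean delay by ten. The spread of individual delays grows just as quickly, so jitter rises along with latency as a link approaches capacity.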

Reducing Latency and Jitter

Latency and jitter can be reduced by making sure your network is properly configured and optimized. This means ensuring that your router is set up correctly, is running the latest firmware, and has a good-quality connection to your internet provider. You should also check the speed of your connection with a bandwidth test to make sure it is not being throttled by your ISP. Upgrading to a better-quality Ethernet cable or trying a different device may also reduce jitter. If you’re on a wireless network, changing channels or switching to a different wireless network source can reduce latency and jitter. Lastly, try scheduling updates outside of business hours so they don’t interfere with normal use of the network.

Conclusion

In conclusion, latency and jitter are two important aspects of network performance. Latency is the time it takes for a packet to travel from its source to its destination, while jitter is the variation in packet delay seen at the receiver. Ideally, jitter should be below 30ms, packet loss should be no more than 1%, and network latency shouldn’t exceed 150ms one-way (300ms round trip). To minimize latency, use faster connections and reduce the number of routers along the path; to limit the impact of jitter, use timestamps so the receiver can buffer packets and play them out at a steady pace. Throughput is also an important measure of network performance, as it is the actual rate at which information is transferred.