Apple’s iOS 15 release cycle made headlines for a set of child safety features announced in August 2021, chief among them a controversial plan to detect known Child Sexual Abuse Material (CSAM) in iCloud Photos. The features aimed to protect children and limit the spread of abusive material, but the announcement sparked a heated debate about privacy and the potential for abuse. This article looks at what the proposed scanning would have done, what actually shipped, and the concerns raised by critics.

A separate feature, Communication Safety in Messages, analyzes images sent or received through Apple’s Messages app. It is often confused with CSAM detection, but it works differently: it uses on-device machine learning to detect sexually explicit images rather than matching against a database of known material. When a parent enables the feature for their child’s account, images that appear to contain nudity are automatically blurred, and the child sees a warning with resources and guidance on how to handle such content.

Apple emphasized that its proposed CSAM detection capability was solely focused on identifying known CSAM images in photos being uploaded to iCloud Photos. These images have been identified by experts at the National Center for Missing and Exploited Children (NCMEC) and other child safety groups. Apple said it would learn nothing about photos that did not match, and that the system would not apply to users who had iCloud Photos turned off.

However, despite Apple’s assurances, concerns have been raised about the potential misuse and privacy implications of this scanning technology. Critics argue that such a system could be prone to false positives, leading to innocent images being flagged as explicit. There are concerns that this could result in unnecessary privacy breaches and mistrust among users.

Privacy advocates also worry about the implications of scanning user content for explicit material. They argue that this could set a dangerous precedent for further surveillance and government overreach. Additionally, there are concerns that this technology could be exploited by governments to target individuals based on their political views or other personal factors.

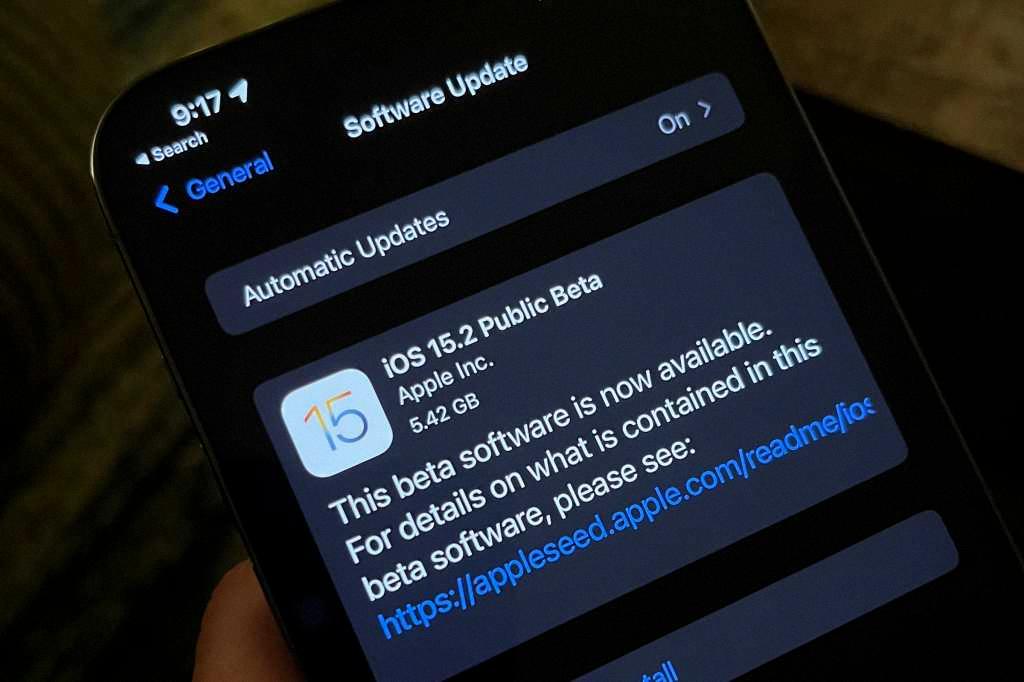

Apple initially planned to ship the CSAM detection feature in an update to iOS 15 by the end of 2021. However, in September 2021, after significant backlash and feedback from customers, advocacy groups, and researchers, the company postponed it. After more than a year of silence, Apple confirmed in December 2022 that it had abandoned its plans for CSAM detection altogether.

The decision to abandon CSAM scanning demonstrates Apple’s willingness to listen to user concerns and prioritize privacy. The company acknowledged that the feature had raised legitimate concerns and that it needed more time to address these issues effectively. Apple is now exploring alternative approaches to child safety, focusing on education, communication, and intervention rather than content scanning.

In short, Apple announced a controversial CSAM scanning feature alongside iOS 15, intended to detect known abuse material in iCloud Photos, but never shipped it. The company ultimately abandoned the feature in response to concerns about privacy and potential misuse. As technology continues to evolve, striking a balance between safety and privacy remains a challenging task for companies and society as a whole.

Remember, the information provided in this article is accurate as of the time of writing and is subject to change as Apple continues to develop its software updates. Stay informed and keep an eye out for any further announcements or developments regarding iOS 15 and its features.

Does iOS 15 Scan For CP?

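iOS 15 includes a feature that analyzes images sent or received in Messages for nudity. It shipped as Communication Safety in iOS 15.2 and relies on an on-device classifier for sexually explicit images in general. It does not match images against a database of known child sexual abuse material (often searched for as “CP”). The analysis is only active if a parent account enables it for a child account.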

When an image containing explicit or inappropriate content is detected, it will be automatically blurred out by the system. Additionally, a popup warning will be displayed to the child, providing resources and guidance on what to do in such situations.
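The flow described above can be sketched in a few lines. Apple has not published its classifier or decision rule, so everything here is hypothetical: `explicit_score` stands in for whatever an on-device model might output, `cutoff` is an arbitrary placeholder, and `Decision` and `handle_image` are names invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    blur: bool          # hide the image behind a blur
    show_warning: bool  # show the child a warning with resources


def handle_image(explicit_score: float, feature_enabled: bool, cutoff: float = 0.9) -> Decision:
    """Decide how to present an image, given a hypothetical classifier score in [0, 1]."""
    # The feature is opt-in: with it disabled, no image is blurred or flagged.
    if not feature_enabled:
        return Decision(blur=False, show_warning=False)
    # Blur and warn only when the score reaches the (assumed) cutoff.
    flagged = explicit_score >= cutoff
    return Decision(blur=flagged, show_warning=flagged)
```

The key property the sketch tries to capture is that the decision is made locally from the classifier output and the parental setting, with no lookup against any external database.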

The purpose of this feature is to help protect children from exposure to harmful and illegal content. By proactively scanning images, iOS 15 aims to create a safer environment for young users while using the Messages app.

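It’s important to note that this analysis targets sexually explicit images specifically, not general images or other types of content, and that it is not a check for known CSAM. Apple has stated that the analysis happens on the device and that it does not gain access to the messages. The feature is designed to prioritize the well-being and safety of children online.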

In summary, iOS 15 does include on-device image analysis to detect nudity in Messages, but it does not scan for known CSAM. The feature is enabled by a parent for a child account and serves as a protective measure to limit exposure to explicit content.

Is It True That iOS 15 Will Scan Your Photos?

Only partly. iOS 15 includes image analysis as an opt-in parental control in Messages, which is not general photo scanning. The broader plan to check photos uploaded to iCloud Photos against known CSAM was announced for iOS 15 but never shipped.

The image analysis in iOS 15 is part of Apple’s Child Safety initiatives and aims to protect children from explicit content. When enabled, it analyzes images sent or received in the Messages app on children’s devices. It does not use CSAM detection, and it does not compare photos with a database of known images. Instead, an on-device machine learning model estimates whether an image contains nudity.

In Apple’s original announcement, parents of children aged 12 and under could choose to be notified if the child viewed or sent a flagged image. Apple removed these parental notifications before the feature shipped in iOS 15.2, so in the released version only the child sees the warning. The images are not viewed by Apple or any third party during this process, because the analysis runs on the device. The cryptographic matching against known images that is often attributed to this feature belonged to the separate iCloud Photos proposal.

It is important to emphasize that this feature is designed with the intention of protecting children from explicit material and providing parents with the necessary tools to ensure their child’s safety. It is not intended to invade privacy or allow Apple to access personal photos without consent.

In summary, the image analysis in iOS 15 is an opt-in parental control that checks images in the Messages app on children’s devices for nudity. It uses an on-device classifier rather than CSAM detection or a database comparison, and in the shipped version it warns the child rather than notifying parents.

Does Apple Scan Your Photos For CP?

Apple proposed to check photos being uploaded to iCloud Photos for Child Sexual Abuse Material (CSAM), but it never deployed the system. It is important to note that the proposed process was specifically designed to detect known CSAM images that have already been identified by experts at the National Center for Missing and Exploited Children (NCMEC) and other child safety organizations.

Here are some key points to understand about the process as Apple described it:

1. Purpose: The primary objective of this scanning capability is to enhance child safety by identifying and reporting CSAM content that may be stored in iCloud Photos.

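2. Detection Method: Apple’s system uses a technique called NeuralHash, which is a perceptual hashing technology rather than a cryptographic hash. It derives a compact hash from each image so that visually similar images, such as resized or recompressed copies, produce the same hash, and compares that hash with a database of hashes of known CSAM images provided by child safety organizations. Cryptographic protocols layered on top are meant to keep individual match results hidden until a threshold of matches is reached.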

3. Privacy Protection: Apple emphasizes that this process is designed with user privacy in mind. The matching runs on the user’s device rather than on Apple’s servers, and Apple said it would learn nothing about images that do not match. Additionally, the matching is limited to photos being uploaded to iCloud Photos and does not extend to other data on the device.

4. Thresholds and Human Review: Apple’s design includes a match threshold to reduce false positives, which the company said would initially be set at about 30 images. Only when the number of matches for a particular account exceeds the threshold is the matched content manually reviewed by a trained team at Apple. This human review step is intended to confirm actual CSAM and catch false positives before any report is made.

5. Reporting to Authorities: If a confirmed match for CSAM content is found, Apple will report it to the appropriate authorities, such as NCMEC. This is in compliance with legal obligations and aims to aid in the prevention and prosecution of child exploitation.
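
The matching and threshold steps above can be illustrated with a toy sketch. This is not NeuralHash, and it omits the cryptographic protocols entirely: `average_hash` is a simple stand-in perceptual hash over a grayscale pixel grid, and the function names, sample data, and `threshold` values are assumptions made for illustration.

```python
from typing import Iterable, List, Set

Image = List[List[int]]  # grayscale pixel grid, values 0-255


def average_hash(image: Image) -> int:
    """Toy perceptual hash: one bit per pixel, set when the pixel is brighter than the image mean."""
    pixels = [p for row in image for p in row]
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def count_matches(images: Iterable[Image], known_hashes: Set[int]) -> int:
    """Count images whose hash appears in the set of known hashes."""
    return sum(1 for image in images if average_hash(image) in known_hashes)


def needs_human_review(images: Iterable[Image], known_hashes: Set[int], threshold: int) -> bool:
    """Flag an account for review only once the match count reaches the threshold."""
    return count_matches(images, known_hashes) >= threshold


# Hypothetical 2x2 "images" for demonstration.
known = [[10, 200], [220, 30]]
brightened = [[15, 205], [225, 35]]  # same picture, slightly brighter
unrelated = [[200, 10], [30, 220]]

known_hashes = {average_hash(known)}
library = [brightened, unrelated]

print(average_hash(known) == average_hash(brightened))  # True: the small edit keeps the hash
print(needs_human_review(library, known_hashes, threshold=2))  # False: one match is below the threshold
```

Requiring several matches before any review is what keeps a single accidental hash collision from flagging an account on its own.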

It is important to understand that this scanning capability is focused solely on CSAM detection and does not involve scanning for other types of content or violating user privacy in any other way. Apple’s intention is to strike a balance between privacy and protecting children from exploitation.

In summary, Apple designed, but never deployed, a system for iCloud Photos to detect known CSAM images using perceptual hashing combined with cryptographic protections. The process was designed to prioritize user privacy and involved human review to ensure accuracy. The objective was to contribute to the fight against child exploitation by reporting identified CSAM content to the appropriate organizations.

Does iOS 15 Have CSAM?

iOS 15 does not have CSAM (Child Sexual Abuse Material) detection. Initially, Apple had announced that CSAM detection would be implemented in an update to iOS 15 and iPadOS 15 by the end of 2021. However, the company later decided to postpone the feature based on feedback received from various stakeholders including customers, advocacy groups, and researchers.

After the initial announcement, Apple faced significant backlash and concerns were raised about privacy implications and potential misuse of the technology. As a result, Apple took the decision to reevaluate and address the concerns raised before proceeding with the implementation of CSAM detection.

Following the postponement, Apple gave no further update for more than a year. In December 2022, the company confirmed that it had abandoned its plans for CSAM detection in iCloud Photos, so the feature never shipped in iOS 15 or any later release.

Conclusion

The iOS 15 cycle involved two distinct features that are easy to confuse. The first, Communication Safety in Messages, analyzes images in the Messages app for nudity as an opt-in parental control, and it did ship. The second, CSAM detection for iCloud Photos, was the controversial one. Due to significant backlash from customers, advocacy groups, researchers, and others, Apple ultimately decided to abandon its implementation.

For Messages, the plan was to use on-device analysis, not CSAM detection technology, to check images sent or received for nudity. If such content was detected, the image would be blurred, and the child would be presented with a warning popup along with resources on what to do. For iCloud Photos, the plan was to match uploads against hashes of known CSAM. Both moves were presented as proactive steps towards preventing the circulation of harmful content and protecting vulnerable users.

However, concerns were raised about the potential privacy implications and the risk of false positives. Critics argued that this scanning feature could lead to an invasion of privacy and create a slippery slope towards increased surveillance. Additionally, the fear of false positives and the possibility of innocent images being flagged as explicit content raised concerns about the accuracy and reliability of the scanning technology.

As a result of the feedback received, Apple made the decision to abandon the CSAM detection plans. While the intention behind the feature was commendable, the concerns raised by various stakeholders highlighted the need for a more nuanced approach to address the issue of online safety without compromising privacy.

Moving forward, it is important for technology companies like Apple to strike a balance between protecting users, particularly children, and respecting their privacy. The challenge lies in finding innovative solutions that prioritize safety while minimizing the potential for abuse or infringement on individual rights. This incident serves as a reminder of the importance of open dialogue and collaboration between tech companies, advocacy groups, researchers, and users to ensure the development of responsible and effective safety measures in the digital age.